Welcome Sean Deighan

gartner + bloom, P.C. is pleased to welcome Associate Sean Deighan, Esq., to our NYC Transportation team today. We are delighted to have you on the journey with us, Sean!! Warm welcome aboard 🚘🚖

Welcome Matt Hill

gartner + bloom, P.C. is pleased to welcome Senior Construction Defect Associate Matt Hill to our Florida office today. We are thrilled to have you join the team, Matt!! Warm welcome aboard 👏👏

Happy Holidays!

Multi-Million-Dollar Federal Court Subrogation Matter Settled

Congratulations to Senior Trial Counsel Brian Franklin and Nicola Duffy on recently settling a multi-million-dollar federal court subrogation matter involving a massive fire at a girls’ camp in upstate New York. 👏👏

The fire broke out in the camp’s commercial kitchen after cook staff left a large quantity of meat unattended on an open-flame charbroiler.

The fire suppression system installed in the cooking hood allegedly failed to operate, and the fire spread to the roof, eventually causing the complete destruction of the commercial structure.

gartner + bloom, P.C., representing the installer of the fire suppression system, demonstrated through its team of experts that the fire’s spread was not the result of an inoperable suppression system, but rather of defects in the commercial structure and in the original installation of the hood.

The team further found evidence that the suppression system had been tampered with.

The matter was successfully resolved for a fraction of the original demand, saving the client from a large potential exposure.

Cheers to Brian Franklin & Nicola Duffy on delivering a phenomenal outcome for our client 🥂💜🥂

Congrats to New Partner Peter Rosenberg, Esq.

gartner + bloom, P.C. is pleased to celebrate the recent promotion of Peter Rosenberg to Partner. Peter has been a formidable litigator who delivers impressive outcomes for clients in our transportation practice. We very much look forward to your continued success and to working with you for decades to come. Cheers to you, Peter! 👏👏👏

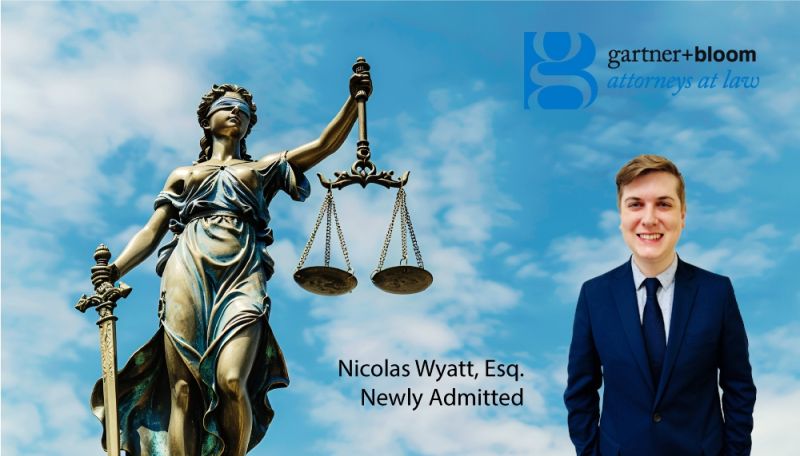

Congrats Nicolas A. Wyatt, Esq.

gartner + bloom, P.C. proudly celebrates and extends warm congratulations to Nicolas A. Wyatt, Esq. on his recent induction to the Bar. Cheers to you, Nicolas 🥂 from all of us on this incredible milestone 👏👏👏